Magnetic Structure Construction with the PR2 Robot

Abstract

While robots have for years welded, cut and manipulated materials on carefully calibrated factory floors, robotic manipulation in less constrained environments such as homes or offices remains an open field of research. In unstructured environments, everything from lighting to object pose can vary from one manipulation attempt to the next, which demands significant robustness from the robot’s manipulation algorithms. Once robust object manipulation is commonplace outside of the factory, a myriad of opportunities for humanoid robots will open up, from cooking to cleaning to construction. This project explores a manipulation task that a two-year-old child could accomplish with little difficulty, but that proves far from trivial for the PR2 robot. Wooden building blocks are classic toys for toddlers, who display a high degree of perception and dexterity when stacking them into towers. This project is an attempt to program the PR2 robot to observe a table, identify magnetic blocks on the table plane, and manipulate those blocks into structures. Because the project ran into several roadblocks in vision and manipulation, these goals were not achieved. Nevertheless, this paper discusses the methodology behind the attempted implementation, as well as the difficulties and successes encountered along the way.

Introduction and Related Work

The goal of this project was to build structures using the PR2 robot. To make building structures more manageable for the robot, magnets within the blocks help connect the pieces together. Long gray bars, fitted with magnets oriented with the same polarity, attach snugly to black cube blocks. Thus the PR2 need only orient a block properly with respect to its counterpart and hold it close in order to achieve a block-to-block connection. To manipulate these blocks properly, it was necessary to determine whether a particular block was a bar or a cube and then estimate the object’s position. This required assessing the error inherent in perceiving objects on a tabletop surface. After many unsuccessful attempts to bring bar and cube objects together to form a connection, for reasons elaborated upon later, the project scope was changed to sorting the objects into their respective groups on opposite sides of the workspace table.

Figure 1. Magnetic Blocks to be detected on a tabletop

The PR2 Robot

The PR2 humanoid mobile robotics platform, developed by Willow Garage, brings the robotics community one step closer to a robot capable of performing tasks in unstructured human environments. The PR2 is a two-armed robotic system with seven degrees of freedom in each arm, not including the one-degree-of-freedom gripper at the end effector. While the PR2 comes with a host of sensors, this project only used those required by the tabletop detection package, namely the Narrow-Angle Monochrome Stereo Cameras along with the LED Texture Projector, which is triggered with the cameras. In addition, the joint encoders and the Tilting Hokuyo Laser Scanner were used for motion planning and collision prevention, respectively [3].

Figure 2. The PR2's sensors utilized for this project

In addition to building the PR2 robot, Willow Garage also created an operating system for it, the Robot Operating System (ROS). ROS allows the robot to ‘subscribe’ to messages sent by each of its sensors via that sensor’s node, make decisions based on that input, and ‘publish’ messages to its actuators. This is an oversimplified picture of ROS; a more detailed explanation can be found at ROS.org/Concepts [4]. In addition to running code on the actual PR2 robot, ROS supports simulating the PR2 in the 3D dynamics simulator Gazebo. Everything in the virtual environment functions the same as on the real PR2, except that sensor data is generated from object files dropped into Gazebo rather than from real objects.
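
To make the publish/subscribe idea concrete, the sketch below models it as a toy in-process message bus in Python. It is only an analogy: `MessageBus`, the topic names, and the speed rule are hypothetical, and nothing here uses the actual ROS client libraries, where each role would be a separate node exchanging messages over named topics.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List


class MessageBus:
    """Toy, in-process stand-in for ROS topics (hypothetical; not the ROS API)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        # Register a callback to run for every message published on `topic`.
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> None:
        # Deliver the message to every subscriber of `topic`, in registration order.
        for callback in self._subscribers[topic]:
            callback(message)


bus = MessageBus()
speed_commands: List[float] = []


def decide_speed(ranges: List[float]) -> None:
    # "Decision" node: slow down if any range reading is closer than 0.5 m.
    bus.publish("cmd_speed", 0.1 if min(ranges) < 0.5 else 0.5)


bus.subscribe("scan", decide_speed)                # listens to a (fake) sensor topic
bus.subscribe("cmd_speed", speed_commands.append)  # (fake) actuator listens to commands

bus.publish("scan", [1.2, 0.4, 2.0])  # a sensor node publishing a reading
bus.publish("scan", [1.2, 0.9, 2.0])
print(speed_commands)  # [0.1, 0.5]
```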

Related Work

Over the past decade, there has been much work on manipulating household objects in robotics. For instance, Ashutosh Saxena at Stanford University explored a purely vision-based algorithm for grasping unknown objects without 3D models. A training set of 2500 real and synthetic items was used to give the robot a wide variety of potential grasps for unknown objects. However, unlike the dense stereo vision used on the PR2, their approach requires only a sparse set of points to plan a successful grasp for an unknown object [5]. Willow Garage itself has published papers on manipulation with the PR2; the most notable manipulation tasks include autonomous door opening and the PR2 plugging itself into a wall socket [1]. These examples required some of the same software packages used in this project, such as move_arm and the gripper_action. Perhaps even more relevant, Willow Garage employees including Kaijen Hsiao and Sachin Chitta published a paper on Contact-Reactive Grasping of Objects with Partial Shape Information, which details the implementation of the PR2’s grasping controller when only a point cloud is perceived and no model is fit to the object data [2].

The GRASP Lab’s own Nick Eckenstein, a Mechanical Engineering PhD student, has tackled the very problem of constructing magnetic bar and cube structures. By avoiding perception, commanding exact joint angles for cube-bar grasping at a designated location, and pre-attaching cubes to the bar blocks, Mr. Eckenstein successfully built a cube structure out of the same magnetic blocks used in this project. As a design choice, the project presented in this paper relies more on perception and higher-level position control, in pursuit of a robot that works with household objects in an unstructured environment.

Approach

To tackle the rather large project of coaxing a 400 lb, 7-DOF humanoid robot into building structures from magnetic blocks, it was necessary to break the problem down into smaller parts. The two main subproblems are object detection and manipulation. While the two can be somewhat decoupled, they are undoubtedly related, since errors in detection propagate into manipulation. For now, the analysis focuses on each part separately.

Object Detection

Anticipating a large need to detect objects lying on a tabletop plane, Willow Garage implemented the Tabletop Object Detector for use with their Manipulation Pipeline. This object detector segments tabletop surfaces using point clouds from the PR2’s Narrow Stereo Cameras. The tabletop itself is determined by a RANSAC estimate of the dominant plane perpendicular to the Z direction. Clusters of points that do not fit the plane are returned as objects. These clusters would normally be fitted to a model in Willow Garage’s Object Database, yielding a detailed 3D mesh of the object and preferred grasp orientations. However, the magnetic bars and cubes used in this project did not match any object in this database. As a result, the Tabletop Detector returned only point clouds representing likely objects.
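
The plane-finding step can be illustrated with a minimal RANSAC sketch in pure Python. It assumes a point cloud of (x, y, z) tuples in a frame whose z axis points up, accepts only candidate planes whose normal lies within a few degrees of that axis, and treats every point farther than 1 cm from the best plane as belonging to an object. The function names, thresholds, and synthetic data are illustrative assumptions rather than the Tabletop Object Detector’s actual code, which also splits the off-plane points into separate clusters.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
Plane = Tuple[float, float, float, float]  # (a, b, c, d): a*x + b*y + c*z + d = 0, unit normal


def plane_from_points(p: Point, q: Point, r: Point) -> Optional[Plane]:
    # Normal = (q - p) x (r - p); None if the three samples are (nearly) collinear.
    u = [q[i] - p[i] for i in range(3)]
    v = [r[i] - p[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    norm = math.sqrt(sum(c * c for c in n))
    if norm < 1e-12:
        return None
    a, b, c = (x / norm for x in n)
    return (a, b, c, -(a * p[0] + b * p[1] + c * p[2]))


def ransac_table_plane(points: Sequence[Point], iterations: int = 200,
                       inlier_dist: float = 0.01, max_tilt_deg: float = 10.0,
                       seed: int = 0) -> Tuple[Plane, List[int]]:
    """Horizontal plane with the most inliers, plus the indices of those inliers."""
    rng = random.Random(seed)
    pts = list(points)
    min_abs_nz = math.cos(math.radians(max_tilt_deg))
    best_plane: Optional[Plane] = None
    best_inliers: List[int] = []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(pts, 3))
        if plane is None or abs(plane[2]) < min_abs_nz:
            continue  # degenerate sample, or plane not perpendicular to z
        a, b, c, d = plane
        inliers = [i for i, (x, y, z) in enumerate(pts)
                   if abs(a * x + b * y + c * z + d) < inlier_dist]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    if best_plane is None:
        raise ValueError("no horizontal plane found")
    return best_plane, best_inliers


# Synthetic cloud: a 20 x 20 grid of table points at z = 0.75 m, plus one small "block" above it.
rng = random.Random(1)
table = [(i * 0.05, j * 0.05, 0.75) for i in range(20) for j in range(20)]
block = [(0.5 + rng.uniform(-0.02, 0.02), 0.5 + rng.uniform(-0.02, 0.02),
          0.75 + rng.uniform(0.03, 0.05)) for _ in range(30)]
cloud = table + block
plane, inliers = ransac_table_plane(cloud)
inlier_set = set(inliers)
object_points = [p for i, p in enumerate(cloud) if i not in inlier_set]
print(len(inliers), len(object_points))  # 400 30
```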

The PR2 robot has dozens of coordinate frames, whose relationships are tracked at all times by the TF package. This project used the ‘base_link’ coordinate frame to define the PR2’s world. Therefore, whenever an object was detected and returned from the Tabletop Object Detector, its position (x, y, z) and orientation quaternion (x, y, z, w) were expressed with respect to the base of the robot (that is, the center of the base, 5 cm above the ground). The strength of the TF package is its ability to convert data between any two connected coordinate frames on demand: a TF listener is given the data in its current frame along with the desired target frame, and returns the transformed result.
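
Conceptually, converting a point between two frames amounts to applying a rigid transform: rotate the point by the child frame’s orientation quaternion (x, y, z, w), then add the frame’s translation. The sketch below shows that arithmetic for a single point; the sensor pose is invented for illustration, and in a real ROS node the transform would be looked up through a TF listener rather than hard-coded.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (x, y, z, w), the ordering used in ROS messages


def quat_rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by unit quaternion q: v' = v + w*t + u x t, where u = (x, y, z) and t = 2 (u x v)."""
    x, y, z, w = q
    tx = 2 * (y * v[2] - z * v[1])
    ty = 2 * (z * v[0] - x * v[2])
    tz = 2 * (x * v[1] - y * v[0])
    return (v[0] + w * tx + (y * tz - z * ty),
            v[1] + w * ty + (z * tx - x * tz),
            v[2] + w * tz + (x * ty - y * tx))


def transform_point(translation: Vec3, rotation: Quat, p: Vec3) -> Vec3:
    """Express point p (given in a child frame) in the parent frame: rotate, then translate."""
    r = quat_rotate(rotation, p)
    return (r[0] + translation[0], r[1] + translation[1], r[2] + translation[2])


# Hypothetical sensor frame: 0.1 m forward of and 1.2 m above base_link, yawed 90 degrees.
yaw90: Quat = (0.0, 0.0, math.sin(math.pi / 4), math.cos(math.pi / 4))
p_base = transform_point((0.1, 0.0, 1.2), yaw90, (1.0, 0.0, 0.0))
print(p_base)  # approximately (0.1, 1.0, 1.2)
```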

Since the Tabletop Detector was unable to fit the magnetic objects to any model in its database, the point clouds returned from the Narrow Stereo Cameras were simply clustered into cohesive objects. To make a rough estimate of each object’s centroid from these point clouds, the average of every point in the cluster was taken. This centroid gives a rough grasp target to feed into the Manipulation Pipeline. Before an object could be grasped, however, it had to be identified as either a cube or a bar.
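
The centroid step described above is a plain average. A minimal sketch, with a hypothetical eight-point cluster standing in for a real stereo point cloud:

```python
from typing import Iterable, Tuple

Point = Tuple[float, float, float]


def cluster_centroid(points: Iterable[Point]) -> Point:
    """Mean of all points in a cluster, used as a rough grasp target."""
    n = 0
    sx = sy = sz = 0.0
    for x, y, z in points:
        sx += x
        sy += y
        sz += z
        n += 1
    if n == 0:
        raise ValueError("empty cluster")
    return (sx / n, sy / n, sz / n)


# Hypothetical cluster: the eight corners of a 4 cm cube resting on a table at z = 0.75 m.
corners = [(0.60 + dx, 0.10 + dy, 0.75 + dz)
           for dx in (0.0, 0.04) for dy in (0.0, 0.04) for dz in (0.0, 0.04)]
print(cluster_centroid(corners))  # approximately (0.62, 0.12, 0.77)
```

Because a stereo camera only sees the surfaces facing it, the mean of a real cluster tends to be pulled toward the camera and the top face, which is one reason this centroid is only a rough grasp target.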

Figure 3. The PR2 robot detecting the centroids of tabletop objects in RViz

The object identification process was achieved by kMeans clustering. Each detected object was fed into a Python implementation of the kMeans algorithm, with point cloud size as the feature. After fifty iterations, two dominant clusters should emerge: one for cubes and the other for bars. Other methods, such as a perceptron and linear regression, were considered for grouping the items, but kMeans was chosen because it gives a clear indication of clustering failure. If the clustering failed by returning only one cluster of objects, a new random seed was generated for the kMeans algorithm and the process was run again. This was repeated up to 100 times until a satisfactory clustering was attained. Then, to ensure the robot knew which group corresponded to the magnetic bars and which to the cubes, the groups were reordered so that the cluster of larger objects always received the higher group number.
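
A minimal sketch of the clustering step as described: one-dimensional k-means (k = 2) on point-cloud size, fifty iterations, a fresh random seed on each retry when only one cluster comes back (up to 100 retries), and relabeling so that the larger objects always receive the higher label. The function names and point counts are hypothetical; this is an illustration, not the project’s original code.

```python
import random
from typing import List, Optional, Sequence


def kmeans_1d(values: Sequence[float], k: int = 2, iterations: int = 50,
              seed: int = 0) -> List[int]:
    """Lloyd's k-means on a single feature; returns one cluster label per value."""
    rng = random.Random(seed)
    centers = rng.sample(list(values), k)  # random initial centers drawn from the data
    labels = [0] * len(values)
    for _ in range(iterations):
        # Assignment step: each value goes to its nearest center (ties go to the lower label).
        labels = [min(range(k), key=lambda j: abs(v - centers[j])) for v in values]
        # Update step: move each center to the mean of its members (an empty cluster keeps its center).
        for j in range(k):
            members = [v for v, lab in zip(values, labels) if lab == j]
            if members:
                centers[j] = sum(members) / len(members)
    return labels


def split_cubes_and_bars(sizes: Sequence[float], max_restarts: int = 100) -> Optional[List[int]]:
    """Label 0 = smaller objects (cubes), 1 = larger objects (bars); None if clustering keeps failing."""
    if len(sizes) < 2:
        return None
    for attempt in range(max_restarts):
        labels = kmeans_1d(sizes, seed=attempt)  # a new random seed on every retry
        if len(set(labels)) < 2:
            continue  # only one cluster came back: treat it as a failure and reseed
        mean = [sum(s for s, lab in zip(sizes, labels) if lab == j) / labels.count(j) for j in (0, 1)]
        if mean[0] > mean[1]:
            labels = [1 - lab for lab in labels]  # reorder so the larger objects get label 1
        return labels
    return None


# Hypothetical point counts for three cubes and three bars.
print(split_cubes_and_bars([410, 395, 402, 1210, 1185, 1230]))  # [0, 0, 0, 1, 1, 1]
# Only two bars visible: forcing two clusters still splits them apart.
print(split_cubes_and_bars([1210, 1185]))  # [1, 0]
```

The second call mirrors real trial 2 discussed later: with k fixed at two, even two nearly identical bars are forced into separate groups.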

Object Manipulation

With a seven-degree-of-freedom arm and several levels of arm controllers to choose from, the first task in manipulation was to determine which controller made the most sense for the magnetic blocks. At the lowest level, ROS has a default joint controller that can move the arm to any desired set of joint angles, but it requires the inverse kinematics to be computed for every target pose expressed in base_link coordinates.
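
To make concrete what computing the inverse kinematics involves, the sketch below solves the textbook case of a planar two-link arm in closed form and checks the result with forward kinematics. The link lengths and target are made up. The PR2’s seven-joint arm is kinematically redundant, so a given gripper pose generally has infinitely many joint solutions, which is part of why a planning package is attractive.

```python
import math
from typing import Tuple


def forward_kinematics(l1: float, l2: float, q1: float, q2: float) -> Tuple[float, float]:
    """End-point (x, y) of a planar two-link arm with shoulder angle q1 and elbow angle q2 (radians)."""
    return (l1 * math.cos(q1) + l2 * math.cos(q1 + q2),
            l1 * math.sin(q1) + l2 * math.sin(q1 + q2))


def inverse_kinematics(l1: float, l2: float, x: float, y: float) -> Tuple[float, float]:
    """Joint angles (q1, q2) that place the end point at (x, y); returns one of the two elbow solutions."""
    cos_q2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)  # law of cosines
    if not -1.0 <= cos_q2 <= 1.0:
        raise ValueError("target out of reach")
    q2 = math.acos(cos_q2)
    q1 = math.atan2(y, x) - math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
    return q1, q2


# Hypothetical link lengths (m) and target point (m).
L1, L2 = 0.4, 0.3
q1, q2 = inverse_kinematics(L1, L2, 0.5, 0.2)
print(forward_kinematics(L1, L2, q1, q2))  # approximately (0.5, 0.2)
```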

To avoid writing an inverse kinematics controller from scratch, the next package considered was move_arm. move_arm takes an XYZ position and XYZW orientation for any frame in the arm (even the tool frame at the end effector) and plans the inverse kinematics and trajectory required to bring that frame to its desired position within the given orientation constraints. The move_arm package uses the Tilting Hokuyo Laser Scanner to avoid collisions along the trajectory. Because of this collision avoidance, move_arm cannot grasp an object by itself, since grasping inherently involves contact. Therefore move_arm is used to bring the PR2’s arm into position a few centimeters directly above the object to be grasped, and pr2_gripper_grasp_plan_cluster must then be called to plan the grasp. Finally, before the grasped object can be moved to a new location, a model of the object must be ‘attached’ to the robot’s end effector frame so that move_arm does not report a collision with the grasped object.
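
The sequence described above (a collision-checked move to a pose a few centimeters above the object, a contact-making grasp handled separately, then a lift once the object is attached) can be sketched as a small pose calculation. The sketch assumes a top-down grasp, a 10 cm standoff, and a gripper whose approach direction is its local +x axis; the function and waypoint names are hypothetical, and a real move_arm goal carries tolerances and constraints not modeled here.

```python
import math
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (x, y, z, w)
Pose = Tuple[Vec3, Quat]


def quat_rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by unit quaternion q (v' = v + w*t + u x t, with u = (x, y, z), t = 2 u x v)."""
    x, y, z, w = q
    tx = 2 * (y * v[2] - z * v[1])
    ty = 2 * (z * v[0] - x * v[2])
    tz = 2 * (x * v[1] - y * v[0])
    return (v[0] + w * tx + (y * tz - z * ty),
            v[1] + w * ty + (z * tx - x * tz),
            v[2] + w * tz + (x * ty - y * tx))


# +90 degrees about y turns a gripper whose approach axis is local +x to point straight down (-z).
TOP_DOWN: Quat = (0.0, math.sin(math.pi / 4), 0.0, math.cos(math.pi / 4))


def top_down_waypoints(centroid: Vec3, standoff: float = 0.10, lift: float = 0.15) -> Dict[str, Pose]:
    """Hypothetical base_link waypoints for a top-down grasp of an object at `centroid`."""
    cx, cy, cz = centroid
    return {
        # Goal for the collision-aware planner (move_arm in the text): no contact yet.
        "pregrasp": ((cx, cy, cz + standoff), TOP_DOWN),
        # Final approach involves contact, so it is left to a grasp planner/controller.
        "grasp": ((cx, cy, cz), TOP_DOWN),
        # Only valid once the object is attached to the gripper in the collision model.
        "lift": ((cx, cy, cz + lift), TOP_DOWN),
    }


waypoints = top_down_waypoints((0.6, -0.2, 0.77))
print(waypoints["pregrasp"][0])                 # approximately (0.6, -0.2, 0.87)
print(quat_rotate(TOP_DOWN, (1.0, 0.0, 0.0)))  # approximately (0.0, 0.0, -1.0)
```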

While each of these controllers was initially tried separately, it became apparent that Willow Garage’s Manipulation Pipeline was more user friendly. The Manipulation Pipeline abstracts away the direct calls to move_arm and the grasping controller’s GraspPlanning service. It was therefore decided that the most straightforward approach to manipulating objects was to build upon the “pick_and_place_controller”, which operates directly on top of the Manipulation Pipeline.

This project’s implementation of a pick_and_place_application starts by calling the Tabletop Object Detector as described in the above section. After obtaining the object centroids and clustering the objects into their respective groups, it calls the Collision_Map_Process to add the detected objects to the current collision map as ‘Graspable Objects’. Any object in this list can be requested to be grasped. The pick_and_place_controller calls move_arm and subsequently pr2_gripper_grasp_plan_cluster under the hood to carry out the planned grasp. Placing the object back on the table then requires a new call to the Tabletop Object Detector, followed by the Collision_Map_Process, to determine the allowable regions for placing the object.
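
The control flow of this pick-and-place loop can be sketched with the ROS services replaced by plain callables. Every function name below is a hypothetical stand-in (the fakes simply record the order of calls), not part of the ROS or Manipulation Pipeline API.

```python
from typing import Callable, List, Tuple

Point = Tuple[float, float, float]


def run_pick_and_place(detect_and_register: Callable[[], List[int]],
                       pick: Callable[[int], bool],
                       refresh_scene: Callable[[], None],
                       place: Callable[[int, Point], bool],
                       goal_for: Callable[[int], Point]) -> List[int]:
    """One pass over the detected objects; returns the ids that were picked and placed."""
    placed: List[int] = []
    for obj_id in detect_and_register():  # Tabletop Object Detector + collision map processing
        if not pick(obj_id):              # move_arm to pre-grasp, then the grasp planner
            continue
        refresh_scene()                   # re-detect so allowable place regions are current
        if place(obj_id, goal_for(obj_id)):
            placed.append(obj_id)
    return placed


# Fake backend that records the call order; object 2 is "unreachable".
log: List[str] = []


def fake_detect() -> List[int]:
    log.append("detect")
    return [1, 2, 3]


def fake_pick(i: int) -> bool:
    log.append(f"pick {i}")
    return i != 2


def fake_refresh() -> None:
    log.append("refresh")


def fake_place(i: int, goal: Point) -> bool:
    log.append(f"place {i}")
    return True


placed = run_pick_and_place(fake_detect, fake_pick, fake_refresh, fake_place,
                            lambda i: (0.6, 0.1 * i, 0.75))
print(placed)  # [1, 3]
print(log)     # ['detect', 'pick 1', 'refresh', 'place 1', 'pick 2', 'pick 3', 'refresh', 'place 3']
```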

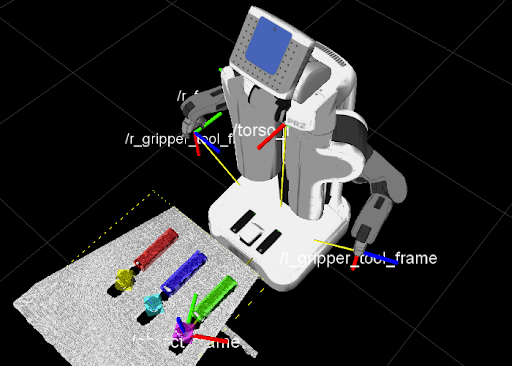

Figure 4. The Collision Map fitting a bounding box to objects in RViz. The TF frames for the torso and each end effector are also labelled.

Difficulties

Returning to the original task of placing one magnetic object on top of another, the collision map proved troublesome: it treated contact between the object held in the end effector and the graspable objects on the table as a collision. Simply attempting to place a bar on top of a cube resulted in a collision state, which prevented any movement. One potential solution was to remove the offending object from the collision map, but the object then became part of the table’s collision map and still produced a collision state.

Object alignment was another source of difficulty, because the pick_and_place_controller abstracted away the pose of the object in the end effector. Discovering an object’s orientation required digging into pr2_gripper_grasp_plan_cluster, which made it difficult to change the orientation in which the PR2 would place the object on the table. The pick_and_place_controller appeared to assume that an object is placed back on the table in the same orientation in which it was grasped.

Figure 5. The Pick and Place Controller grasping an object in RViz

Object Sorting

Despite attempts to overcome these difficulties by reimplementing lower levels of the grasping controller, these issues remained unresolved and caused the project to shift in a new direction. In order to have something to show for the effort put into understanding the rather complicated ROS Manipulation Pipeline, a more manageable goal was set: to organize the magnetic block objects in front of the PR2 robot. Ideally, this would demonstrate the ability to differentiate two groups of objects as well as the ability to manipulate those objects to desired positions.

Figure 6. A diagram of the block sorting algorithm's workspace

The first step in organizing the magnetic objects was to identify the blocks using Tabletop Object Detection together with the centroid detection and kMeans clustering described in the Object Detection section above. Then, with each object assigned to one of the two groups, each object in the centroid list is requested to be grasped with the end effector closest to that object’s desired location (see Figure 6). The right end effector first attempts to grasp the magnetic bars, and the left end effector attempts to grasp the magnetic cubes. If the grasp planner reports that a particular grasp failed, the grasp is attempted again.

If the grasp planner reports that an object cannot be grasped by a particular end effector, the object is assumed to be out of that arm’s reach, and it is grasped by the other arm instead. After a successful grasp with the other arm, the block is placed in the ‘Transfer Region’, a space accessible to both the left and right arms. The arm closest to the object’s desired location (i.e., the arm originally intended for it) is then called to grasp the object again.

Now, with the cube or bar in the desired hand, the block is placed with the other sorted objects in any available location within a 0.5 m by 0.5 m square on the tabletop. This process is repeated for every object initially detected in front of the robot.

This reduced task was much simpler, since it avoided collisions between objects and played to the strengths of the detection and sorting algorithms. Unfortunately, it also failed to yield a successful test run, due to difficulties bringing up the collision detector on the real PR2 robot. Moreover, even though the Tabletop Detector found objects on the table, the collision detector failed to add them to the GraspableObjects list, resulting in an empty list of objects to be grasped.

Experiment Results and Analysis

With a system as complex as the PR2, it became apparent that the initial goals of this project could not be met within the time allotted. As such, the results and analysis focus on what was accomplished: object detection, centroid estimation, and clustering of the magnetic block objects.

Results

While centroid estimation for the magnetic tabletop objects was fairly robust on simulated Gazebo data, the results on real PR2 data were less than optimal. This is largely because the default Tabletop Object Detector failed to perceive some or all of the cube objects on the table and register them as Graspable Objects.

Figure 7. From left to right: Simulation Test One - 6 centroids, Test Two - 6 centroids, and Test Three - 5 centroids

Figure 8. From left to right: PR2 Test One - 3 centroids, Test Two - 2 centroids, and Test Three - 3 centroids

Analysis

The centroid detection algorithm depends on the Tabletop Detector returning useful point clouds for the objects of interest. If the Tabletop Detector fails to return points for a given object, or if two objects are grouped together, the centroid detection results will be off. However, when the objects are spaced far enough apart and the Tabletop Detector returns a point cloud for each of them, the centroid detection and subsequent grouping tend to correctly predict each object’s centroid and type.

In simulation trials 1 and 2, the objects are spaced 20 cm apart, which appears to be far enough for the Tabletop Object Detector to segment their point clouds correctly, and thus all six centroids are correctly estimated and grouped. In simulation trial 3, however, the blocks are only 10 cm apart, which causes the Object Detector to merge a bar and a cube into one very large object. The group of larger objects always has a green sphere at each centroid, whereas the group of smaller objects has a red sphere. In simulation trial 3, the bars and cubes that remain separate are all placed in the same group, because the single oversized bar-cube object is placed in the other group by itself. The centroid of that merged bar-cube object lies between the centroids of the two individual objects, at their point-count-weighted average.
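
The effect of spacing on segmentation, and where a merged object’s centroid ends up, can be reproduced with a simple Euclidean (single-linkage) clustering sketch. The 2 cm tolerance and the synthetic points along a line are assumptions, not the Tabletop Object Detector’s actual parameters. Note that the centroid of a merged cluster is the point-count-weighted mean of the two objects’ centroids, which coincides with their midpoint only when both contribute the same number of points.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def euclidean_clusters(points: Sequence[Point], tolerance: float) -> List[List[Point]]:
    """Single-linkage clustering: points closer than `tolerance` (chained) share a cluster."""
    unvisited = set(range(len(points)))
    clusters: List[List[Point]] = []
    while unvisited:
        frontier = [unvisited.pop()]
        members: List[int] = []
        while frontier:
            i = frontier.pop()
            members.append(i)
            near = [j for j in unvisited if math.dist(points[i], points[j]) < tolerance]
            unvisited.difference_update(near)
            frontier.extend(near)
        clusters.append([points[i] for i in sorted(members)])
    return clusters


def centroid(cluster: Sequence[Point]) -> Point:
    n = len(cluster)
    return (sum(p[0] for p in cluster) / n,
            sum(p[1] for p in cluster) / n,
            sum(p[2] for p in cluster) / n)


# Hypothetical points along a line: an 11-point bar spanning x = 0.00 to 0.10 m and a 5-point cube.
bar = [(0.01 * i, 0.0, 0.76) for i in range(11)]


def cube_at(x0: float) -> List[Point]:
    return [(x0 + 0.01 * i, 0.0, 0.76) for i in range(5)]


far = euclidean_clusters(bar + cube_at(0.20), tolerance=0.02)    # 10 cm gap
near = euclidean_clusters(bar + cube_at(0.115), tolerance=0.02)  # 1.5 cm gap
print(len(far), len(near))   # 2 1
print(centroid(near[0])[0])  # about 0.0766 (count-weighted mean), not the midpoint 0.0925
```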

As with any real system, data from the actual PR2 robot is less accurate and more error prone. In real trial 1, only three centroids are detected despite four objects being present. The two bars are seen and grouped together, and the one detected cube is placed in a group by itself. However, the other cube is missing entirely from the Tabletop Detector scan. The same result occurs in real trial 3, except that this time two cubes are ignored by the Tabletop Detector. Real trial 2 is an interesting case, as the only two objects detected are the bars. These bars would normally be grouped together, but since the algorithm forces two groups onto the scene, each bar is placed in its own group despite the small difference in size between them.

Future Work and Conclusion

Since the goal of manipulating magnetic blocks into structures was not met, the next step for the project is to pick up where this paper leaves off in manipulation. First, block detection will be simplified by color coding the small magnetic cubes bright red, since the Object Detector previously had difficulty seeing them, and the larger bars bright green. This workaround should allow the real PR2 to detect the objects and their locations as reliably as the simulated PR2 in Gazebo.
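
If the blocks are color coded as proposed, each detected cluster could be labeled by its average color instead of its size. The sketch below assumes each point carries an RGB color in the 0 to 1 range (the narrow stereo pair described earlier is monochrome, so the colors would have to come from a color camera); the hue bands and saturation cutoff are hypothetical and would need tuning under real lighting.

```python
import colorsys
from typing import Optional, Sequence, Tuple

RGB = Tuple[float, float, float]  # each channel in [0, 1]


def classify_by_color(colors: Sequence[RGB], min_saturation: float = 0.4) -> Optional[str]:
    """Return 'cube' for red clusters, 'bar' for green ones, None if unsure (hypothetical thresholds)."""
    n = len(colors)
    if n == 0:
        return None
    # Average in RGB space first, which avoids averaging hue angles across the red wrap-around.
    r = sum(c[0] for c in colors) / n
    g = sum(c[1] for c in colors) / n
    b = sum(c[2] for c in colors) / n
    h, s, _v = colorsys.rgb_to_hsv(r, g, b)
    if s < min_saturation:
        return None  # too gray to trust (shadow, glare, or the table itself)
    hue_deg = h * 360.0
    if hue_deg < 20.0 or hue_deg > 340.0:
        return "cube"  # red
    if 90.0 <= hue_deg <= 150.0:
        return "bar"   # green
    return None


print(classify_by_color([(0.9, 0.1, 0.1), (0.8, 0.15, 0.1)]))  # cube
print(classify_by_color([(0.1, 0.8, 0.2)]))                    # bar
print(classify_by_color([(0.5, 0.5, 0.5)]))                    # None
```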

With the objects reliably detected and grasped, the next step will be to extract from the Pick and Place Controller the orientation of each magnetic bar once it is in the PR2’s gripper. Rotating a bar 90 degrees about its short axis parallel to the table will stand it upright, in an ideal position to be placed on top of the magnetic cubes. Repeating this process for each bar will bring the project significantly closer to its goal of constructing magnetic block structures.
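
The proposed reorientation can be expressed as a quaternion product. This sketch assumes the bar’s long axis is its local x axis and its short horizontal axis is local y; under that assumption, right-multiplying the bar’s current orientation by a quarter turn about y stands the bar on end. The names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (x, y, z, w)


def quat_multiply(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b (b is applied first, then a) in (x, y, z, w) order."""
    ax, ay, az, aw = a
    bx, by, bz, bw = b
    return (aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw,
            aw * bw - ax * bx - ay * by - az * bz)


def quat_rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by unit quaternion q (v' = v + w*t + u x t, with u = (x, y, z), t = 2 u x v)."""
    x, y, z, w = q
    tx = 2 * (y * v[2] - z * v[1])
    ty = 2 * (z * v[0] - x * v[2])
    tz = 2 * (x * v[1] - y * v[0])
    return (v[0] + w * tx + (y * tz - z * ty),
            v[1] + w * ty + (z * tx - x * tz),
            v[2] + w * tz + (x * ty - y * tx))


# Assumed bar frame: long axis = local x, short horizontal axis = local y.
QUARTER_TURN_ABOUT_Y: Quat = (0.0, math.sin(math.pi / 4), 0.0, math.cos(math.pi / 4))


def stand_bar_upright(q_bar: Quat) -> Quat:
    # Right-multiplying rotates about the bar's own (local) y axis, not a fixed base_link axis.
    return quat_multiply(q_bar, QUARTER_TURN_ABOUT_Y)


# A bar lying on the table with its long axis along base_link y (a yaw of 90 degrees).
lying: Quat = (0.0, 0.0, math.sin(math.pi / 4), math.cos(math.pi / 4))
upright = stand_bar_upright(lying)
print(quat_rotate(lying, (1.0, 0.0, 0.0)))    # long axis before: approximately (0, 1, 0)
print(quat_rotate(upright, (1.0, 0.0, 0.0)))  # long axis after: approximately (0, 0, -1), i.e. vertical
```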

Beyond the scope of the project that was initially proposed in this report, I would like to see the PR2 construct models out of these magnetic blocks purely based upon an input CAD model and a table full of blocks. This would truly demonstrate the ability of the PR2 to robustly manipulate objects in an unstructured environment.

Despite a large effort to understand detection and manipulation on the PR2 robot, and to implement an algorithm that relies on the default ROS implementations of both, this project did not succeed in constructing magnetic structures. Further simplifying assumptions, such as color-coded blocks to aid perception or fixed block positions and orientations, would have been necessary to complete this task. Ultimately, a great deal was learned about ROS, the PR2, and the strengths and weaknesses of the current implementations of perception and manipulation. With these lessons taken to heart, this project will continue working toward magnetic structure construction with the PR2 robot.

References

[1] C. Ott, B. Baeuml, C. Borst, and G. Hirzinger, "Autonomous Opening of a Door with a Mobile Manipulator: A Case Study," in 6th IFAC Symposium on Intelligent Autonomous Vehicles (IAV), 2007.

[2] K. Hsiao, S. Chitta, M. Ciocarlie, and E. G. Jones, "Contact-reactive grasping of objects with partial shape information," in Workshop on Mobile Manipulation, ICRA, 2010.

[3] "PR2: Hardware Specs," 2010. Available: http://www.willowgarage.com/pages/pr2/specs

[4] P. Bouffard, "ROS Concepts," 2011. Available: http://www.ros.org/wiki/ROS/Concepts

[5] A. Saxena, J. Driemeyer, and A. Y. Ng, "Robotic grasping of novel objects using vision," IJRR, vol. 27, no. 2, pp. 157–173, 2008.