Hoeffding

Learning guarantees

How sure can you be in your estimate, supposing you still believe your model? The following bound (based on the Hoeffding inequality) will be very useful later in the course:

{$P(|\hat\theta_{MLE} - \theta^*| \ge \epsilon)\le 2e^{-2n\epsilon^2},$}

where {$\theta^*$} is the true parameter, {$n$} is the number of i.i.d. tosses, and {$\epsilon > 0$}.
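As a sanity check, the bound can be compared against simulation. The sketch below assumes a hypothetical fair coin ({$\theta^* = 0.5$}) and takes {$\hat\theta_{MLE}$} to be the observed proportion of heads; the helper names `hoeffding_bound` and `empirical_deviation_prob` are made up for illustration. It estimates how often the estimate lands at least {$\epsilon$} away from {$\theta^*$} and prints that frequency next to {$2e^{-2n\epsilon^2}$}.

```python
import math
import random


def hoeffding_bound(n: int, epsilon: float) -> float:
    """Right-hand side of the bound: 2 * exp(-2 * n * epsilon^2)."""
    return 2.0 * math.exp(-2.0 * n * epsilon ** 2)


def empirical_deviation_prob(n: int, epsilon: float, theta_star: float,
                             trials: int, rng: random.Random) -> float:
    """Fraction of simulated experiments (n i.i.d. tosses each) in which the
    observed proportion of heads is at least epsilon away from theta_star."""
    hits = 0
    for _ in range(trials):
        heads = sum(rng.random() < theta_star for _ in range(n))
        theta_hat = heads / n  # MLE for a Bernoulli parameter
        # Small tolerance so deviations of exactly epsilon are not lost to
        # floating-point rounding (e.g. 0.5 - 0.4 is slightly below 0.1).
        if abs(theta_hat - theta_star) >= epsilon - 1e-12:
            hits += 1
    return hits / trials


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is reproducible
    epsilon, theta_star = 0.1, 0.5  # theta_star = 0.5 is an assumed "true" coin
    for n in (10, 50, 100, 185):
        emp = empirical_deviation_prob(n, epsilon, theta_star, trials=2000, rng=rng)
        print(f"n={n:4d}  empirical={emp:.4f}  bound={hoeffding_bound(n, epsilon):.4f}")
```

The bound holds for every {$\theta^*$} and exceeds 1 for small {$n$}, so it is loose; the simulated frequencies typically come out well below it.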

In words: for an i.i.d. sample of n tosses, the probability that the observed proportion of heads deviates from the true proportion by at least {$\epsilon$} decreases exponentially in n and in {$\epsilon^2$}. So how much data do I need if I want to be 95% sure that my estimate is within {$\pm 0.1$} of the true proportion? We can solve for n as follows:

{$ \; \begin{align*} P(|\hat\theta_{MLE} - \theta^*| \ge 0.1) \le 2e^{-2n \cdot 0.1^2} & \le 1 - 0.95 = 0.05 \\ \Rightarrow n & \ge \frac{\log \frac{2}{0.05}}{2 \cdot 0.1^2} \approx 184.4, \end{align*} \; $}

so, rounding up, {$n = 185$} tosses suffice.

Here and below, log is the natural log. In general, if I want {$1-\delta$} confidence with {$\epsilon$} (absolute) error, I need:

{$n \ge \frac{\log \frac{2}{\delta}}{2\epsilon^2}.$}
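The general formula translates directly into a small helper. This is a sketch: the function name `required_tosses` is hypothetical, and it rounds up with `math.ceil` because the number of tosses must be an integer. The grid of {$\epsilon$} and {$\delta$} values is chosen only for illustration.

```python
import math


def required_tosses(epsilon: float, delta: float) -> int:
    """Smallest integer n with n >= log(2/delta) / (2 * epsilon^2), natural log."""
    if not (epsilon > 0 and 0 < delta < 1):
        raise ValueError("need epsilon > 0 and 0 < delta < 1")
    return math.ceil(math.log(2.0 / delta) / (2.0 * epsilon ** 2))


if __name__ == "__main__":
    print(required_tosses(0.1, 0.05))  # the worked example above: 185
    # Illustrative grid: halving epsilon roughly quadruples n, while shrinking
    # delta tenfold only adds about log(10) / (2 * epsilon^2) tosses.
    for epsilon in (0.1, 0.05):
        row = [required_tosses(epsilon, delta) for delta in (0.1, 0.05, 0.01, 0.001)]
        print(f"epsilon={epsilon}: {row}")
```

The asymmetry in the grid reflects the formula: n grows like {$1/\epsilon^2$} but only like {$\log(1/\delta)$}.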

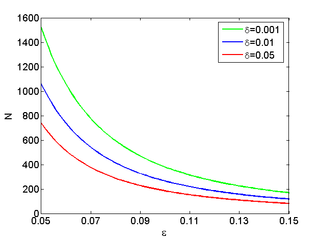

Here are the required number of independent tosses for several values of confidence {$\delta$}:

Guarantees of this kind are what the Probably Approximately Correct (PAC) framework aims to derive for learning algorithms, where the key goal is for the sample complexity n to grow at most polynomially in {$1/\epsilon$}, {$1/\delta$}, and the other relevant problem parameters. For more detail than we'll cover, see the page on PAC learning.