|


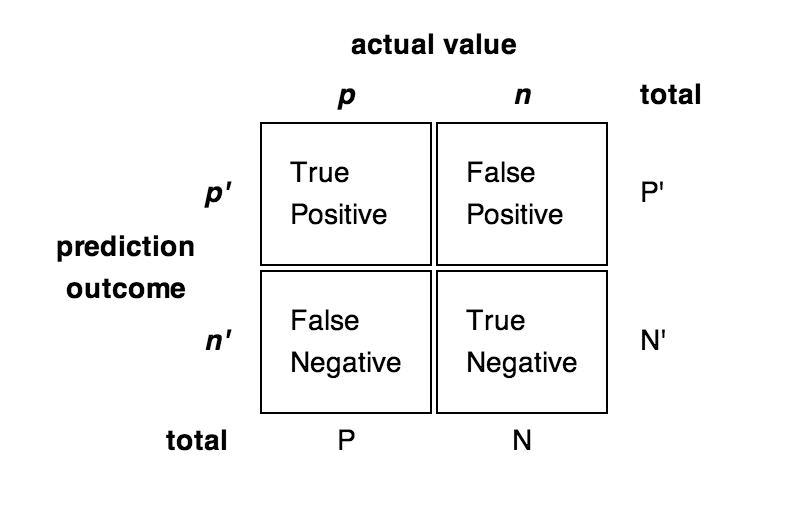

Precision Recall

Performance measures

accuracy (ACC)

precision = positive predictive value (PPV)

recall = sensitivity = true positive rate (TPR)

false positive rate (FPR)

specificity (SPC) = true negative rate (TNR)

negative predictive value (NPV)

false discovery rate (FDR)

F1 score = harmonic mean of precision and recall
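
The measures listed above can all be computed from the four confusion-matrix counts. The following is a minimal Python sketch, not a reference implementation: the function name `classification_metrics`, the argument names `tp`, `fp`, `tn`, `fn`, and the choice to return NaN when a denominator is zero are assumptions made for illustration.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute the listed measures from confusion-matrix counts.

    tp, fp, tn, fn are the numbers of true positives, false positives,
    true negatives and false negatives (hypothetical argument names).
    """
    def ratio(num, den):
        # Assumption: report NaN rather than raising when a rate is undefined.
        return num / den if den else float("nan")

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "ACC": ratio(tp + tn, tp + fp + tn + fn),  # accuracy
        "PPV": precision,                          # precision
        "TPR": recall,                             # recall / sensitivity
        "FPR": ratio(fp, fp + tn),                 # false positive rate
        "SPC": ratio(tn, fp + tn),                 # specificity
        "NPV": ratio(tn, tn + fn),                 # negative predictive value
        "FDR": ratio(fp, tp + fp),                 # false discovery rate
        # Harmonic mean of precision and recall.
        "F1": ratio(2 * precision * recall, precision + recall),
    }


if __name__ == "__main__":
    for name, value in classification_metrics(tp=40, fp=10, tn=45, fn=5).items():
        print(f"{name}: {value:.3f}")
```

Note that FPR = 1 - SPC and FDR = 1 - PPV, so only some of these measures are independent.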

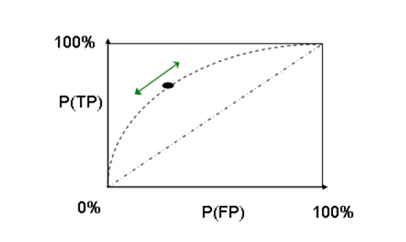

ROC Curves

Order the predicted examples from highest score (highest probability of having a label of “yes”) to lowest score. As one moves down the list, including more examples, the number of true positives will at first increase rapidly, and then more slowly. One can view the x-axis as the number of items we label as “yes” and the y-axis as the number of those items that really were “yes”. In the standard ROC curve both axes are normalized to run from 0 to 100%: the y-axis is the true positive rate, and the x-axis is the false positive rate (the fraction of real “no” items that have been labeled “yes”).

Random guessing will give a 45 degree line on this plot. The higher the ROC curve is, the more accurate (on average) the prediction is. Thus, one can measure the quality of the model using the area under the curve (AUC). AUC=0.5 is random guessing, AUC=1.0 is perfect prediction. |
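
The ranking procedure described above can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not a definitive implementation: it uses the standard ROC axes (false positive rate on x, true positive rate on y), treats tied scores as a single step, and integrates with the trapezoidal rule. The names `roc_curve_points` and `auc` are hypothetical.

```python
def roc_curve_points(scores, labels):
    """Return (FPR, TPR) points found by sweeping down the ranked list.

    scores: predicted probability of "yes" for each example.
    labels: truthy for a real "yes", falsy for a real "no".
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    n_pos = sum(1 for _, y in ranked if y)
    n_neg = len(ranked) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative example")

    points = [(0.0, 0.0)]
    tp = fp = 0
    for i, (score, y) in enumerate(ranked):
        if y:
            tp += 1
        else:
            fp += 1
        # Emit a point only after the last example of a group of tied scores.
        if i + 1 == len(ranked) or ranked[i + 1][0] != score:
            points.append((fp / n_neg, tp / n_pos))
    return points


def auc(points):
    """Area under the curve by the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


if __name__ == "__main__":
    scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3]
    labels = [1, 1, 0, 1, 0, 0]
    print(auc(roc_curve_points(scores, labels)))
```

With this definition, a model that assigns every example the same score produces the single diagonal segment from (0, 0) to (1, 1), which has area 0.5 and matches the random-guessing baseline.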